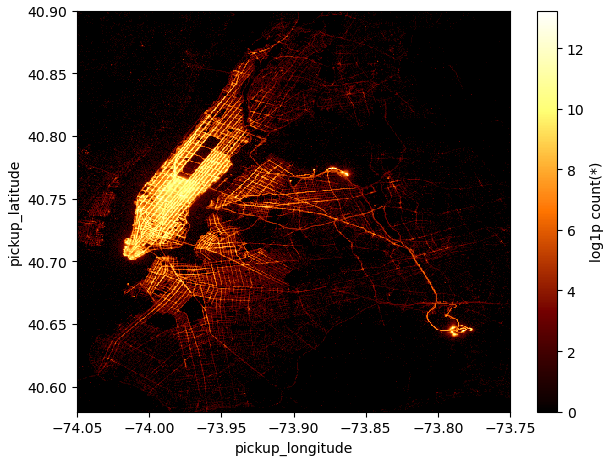

Lightning-fast big data exploration with vaex

vaex is a powerful DataFrame library that allows us to visualise, explore, and statistically process, in the blink of an eye, huge data files that do not fit into memory. I’m coming back to this fantastic library, and in this notebook I give a brief intro to what it can do.
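Part of what makes this fast is that vaex computes statistics such as counts and means on regular N-dimensional grids in streaming passes over memory-mapped data, instead of loading everything into RAM. The sketch below is a plain-NumPy illustration of that idea, not vaex’s API: a 2D count grid is accumulated chunk by chunk, so only one chunk is ever in memory. The generator `iter_chunks` is hypothetical and stands in for reading slices of a large file.

```python
import numpy as np

def iter_chunks(n_rows, chunk_size, seed=0):
    """Hypothetical stand-in for streaming (x, y) slices of a file too big for RAM."""
    rng = np.random.default_rng(seed)
    for start in range(0, n_rows, chunk_size):
        n = min(chunk_size, n_rows - start)
        yield rng.normal(size=n), rng.normal(size=n)

def binned_count(chunks, xlim, ylim, shape=(64, 64)):
    """Accumulate a 2D count grid one chunk at a time (only one chunk in memory)."""
    counts = np.zeros(shape, dtype=np.int64)
    for x, y in chunks:
        h, _, _ = np.histogram2d(x, y, bins=shape, range=[xlim, ylim])
        counts += h.astype(np.int64)
    return counts

counts = binned_count(iter_chunks(1_000_000, 100_000), (-4, 4), (-4, 4))
print(counts.sum())  # number of rows that fall inside the limits
```

Because every chunk is binned on the same fixed grid, the partial grids can simply be summed; this is also why such statistics parallelise well.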

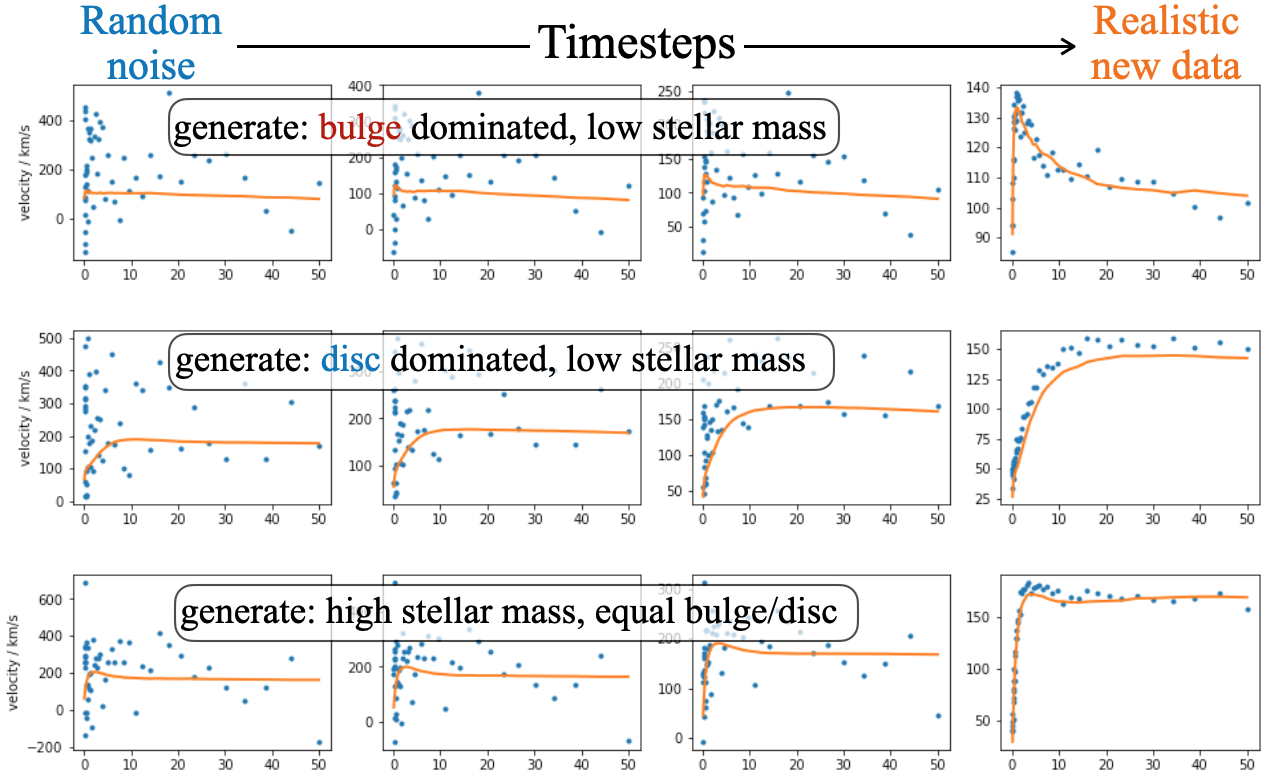

Conditioning diffusion models

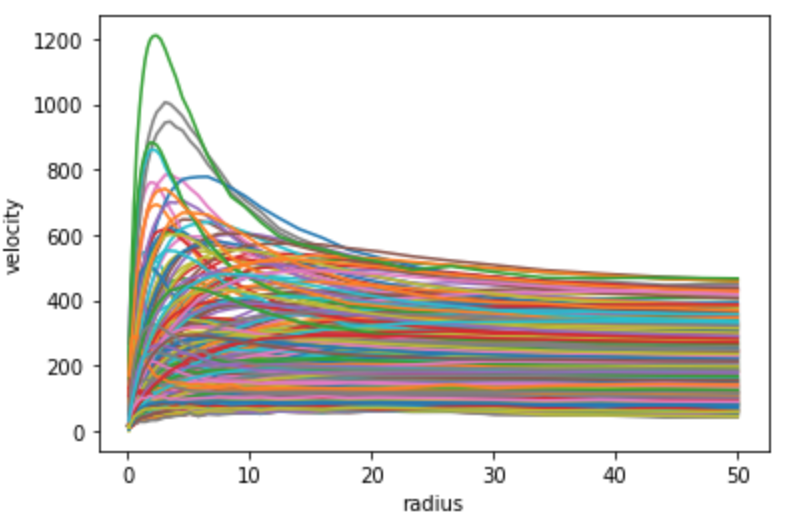

After the intro to diffusion models, let’s take them a step further by introducing conditioning into these simplified models, again from scratch. We will build a model able to generate rotation curves with peculiar features that we can specify upfront.

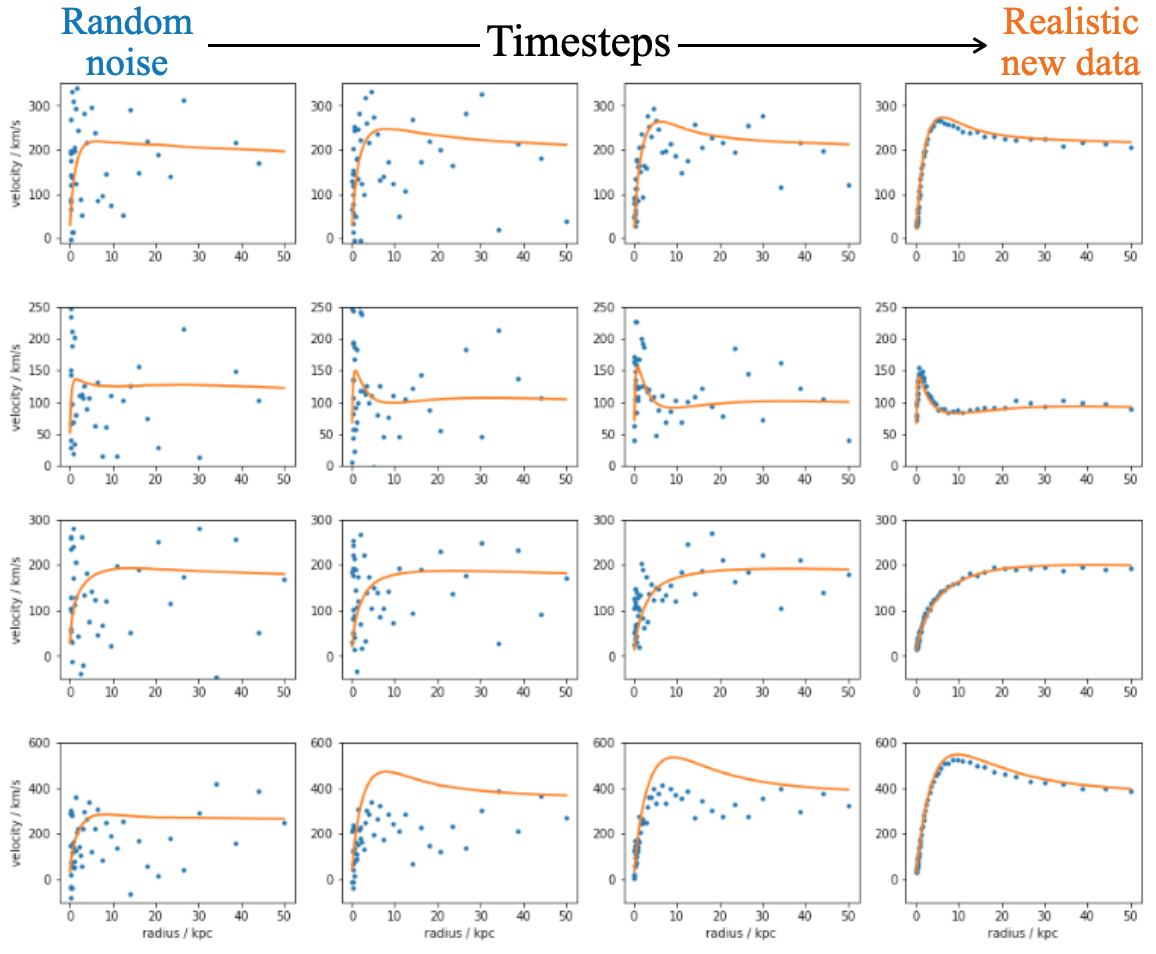

Intro to diffusion models from scratch

This notebook is an introduction to diffusion models, currently the state of the art in computer vision and image generation. I build from scratch a diffusion model that generates realistic rotation curves starting from pure random noise.
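The core of a diffusion model is a fixed forward process that gradually turns data into noise; the network is then trained to undo it. Below is a minimal NumPy sketch of the forward (noising) step, assuming the DDPM-style linear variance schedule (beta from 1e-4 to 0.02 over 1000 steps), which may differ from the schedule used in the notebook. With abar_t the cumulative product of (1 - beta_t), a noisy sample at step t can be drawn in one shot as x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps. The toy curve `v0` is hypothetical.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)        # assumed linear variance schedule (DDPM-style)
alpha_bar = np.cumprod(1.0 - betas)       # abar_t = prod_{s<=t} (1 - beta_s)

def q_sample(x0, t, eps):
    """Draw x_t ~ q(x_t | x_0) in closed form, given standard-normal noise eps."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps

# Hypothetical toy "rotation curve", rescaled to unit amplitude
r = np.linspace(0.1, 10.0, 50)
v0 = np.tanh(r / 2.0)

rng = np.random.default_rng(0)
eps = rng.standard_normal(v0.shape)
for t in (0, 100, 500, T - 1):
    xt = q_sample(v0, t, eps)
    print(t, round(float(alpha_bar[t]), 4))  # fraction of signal variance left at step t
```

In the DDPM recipe, training shows the network pairs (x_t, t) and asks it to predict eps; generation then runs the process backwards starting from pure noise.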

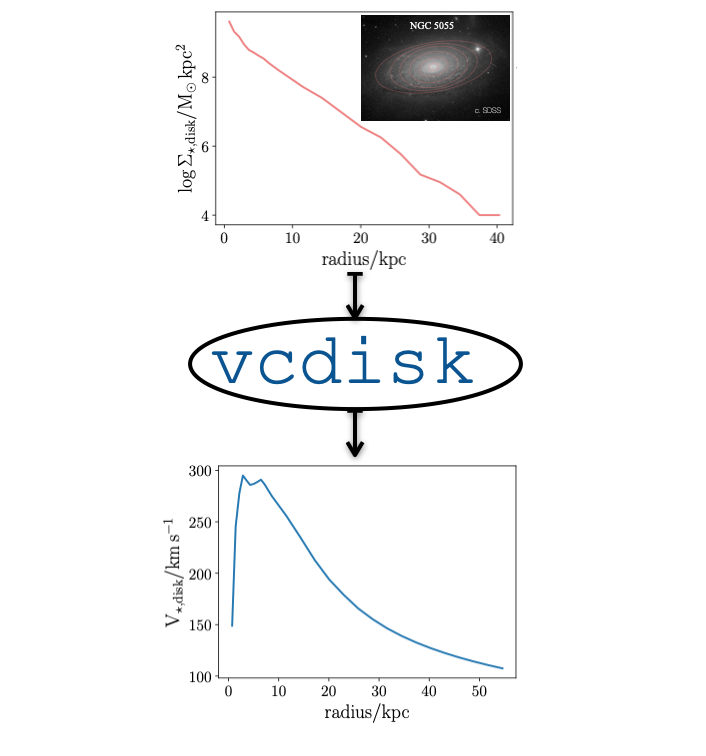

vcdisk: a python package for galaxy rotation curves

This notebook presents a minimal Python package that I wrote to solve Poisson’s equation in galactic disks. vcdisk is a handy toolbox of essential functions to compute the circular velocity of thick disks and flattened bulges of arbitrary surface density.
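For context on what a general solver like vcdisk is for: only a few surface densities give the disk circular velocity in closed form. The best-known case is the classic razor-thin exponential disk, Sigma(R) = Sigma0 * exp(-R/Rd), for which V^2(R) = 4 pi G Sigma0 Rd y^2 [I0(y)K0(y) - I1(y)K1(y)] with y = R/(2 Rd). The sketch below evaluates that textbook formula with SciPy; it is not vcdisk’s API, and the units (kpc, km/s, Msun) and value of G are my own choices.

```python
import numpy as np
from scipy.special import i0e, i1e, k0e, k1e

G = 4.30091e-6  # gravitational constant in kpc (km/s)^2 / Msun

def vc_exponential_disk(R, sigma0, Rd):
    """Circular velocity (km/s) in the plane of a razor-thin exponential disk.

    R and Rd in kpc, sigma0 (central surface density) in Msun/kpc^2.
    The exponentially scaled Bessel functions avoid overflow at large y,
    since I_n(y) * K_n(y) = i_ne(y) * k_ne(y).
    """
    y = np.asarray(R, dtype=float) / (2.0 * Rd)
    bessel = i0e(y) * k0e(y) - i1e(y) * k1e(y)
    return np.sqrt(4.0 * np.pi * G * sigma0 * Rd * y**2 * bessel)

R = np.linspace(0.01, 30.0, 3000)
v = vc_exponential_disk(R, sigma0=5e8, Rd=3.0)
print(R[np.argmax(v)] / 3.0)  # the curve peaks at roughly 2.2 disk scale lengths
```

Thickness, truncations, or an arbitrary measured profile break this closed form, which is where a numerical Poisson solver becomes necessary.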

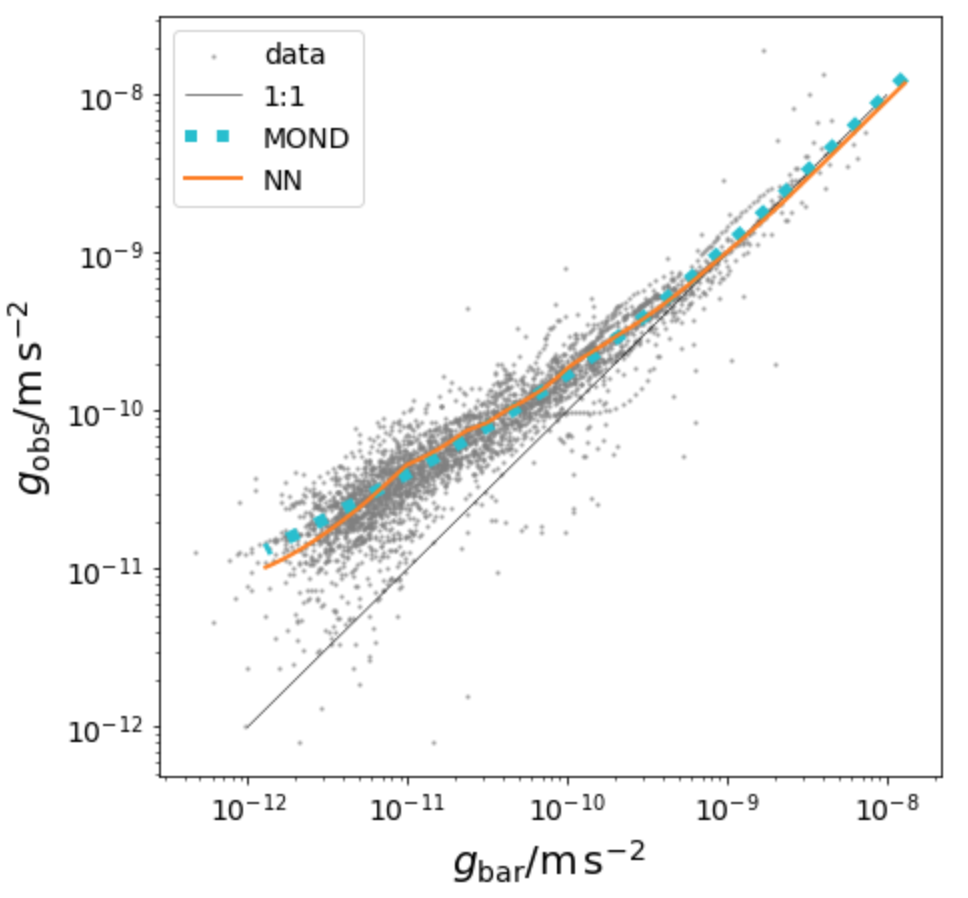

A simple neural network to predict galaxy rotation curves from photometry

Designing a simple feedforward neural network, made with just linear layers, activations, and batch normalization, to predict rotation curves from galaxy surface brightness profiles. Comparison to the MOND empirical law.
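A minimal NumPy sketch of the building blocks named above, linear layers, batch normalization, and an activation, stacked into a small feedforward net that maps a sampled surface-brightness profile to a sampled rotation curve. The layer sizes, the choice of ReLU, and the input/output lengths (20 and 15 radii) are hypothetical; the notebook’s actual network and framework may differ, and training is omitted.

```python
import numpy as np

rng = np.random.default_rng(42)

def linear(x, W, b):
    return x @ W + b

def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch norm: normalize each feature over the batch, then scale and shift."""
    mu = x.mean(axis=0)
    var = x.var(axis=0)
    return gamma * (x - mu) / np.sqrt(var + eps) + beta

def relu(x):
    return np.maximum(x, 0.0)

def init_layer(n_in, n_out):
    W = rng.normal(scale=np.sqrt(2.0 / n_in), size=(n_in, n_out))  # He initialisation
    return W, np.zeros(n_out), np.ones(n_out), np.zeros(n_out)     # W, b, gamma, beta

n_in, n_hidden, n_out = 20, 64, 15   # hypothetical: SB profile at 20 radii -> Vc at 15 radii
hidden = [init_layer(n_in, n_hidden), init_layer(n_hidden, n_hidden)]
W_out = rng.normal(scale=np.sqrt(1.0 / n_hidden), size=(n_hidden, n_out))
b_out = np.zeros(n_out)

def forward(x):
    for W, b, gamma, beta in hidden:
        x = relu(batch_norm(linear(x, W, b), gamma, beta))
    return linear(x, W_out, b_out)   # plain linear output layer for regression

batch = rng.normal(size=(32, n_in))  # stand-in for a batch of (log) surface-brightness profiles
print(forward(batch).shape)
```

Batch normalization keeps the inputs of each activation centred and of unit scale across the batch, which typically makes such plain stacks of linear layers easier to train.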

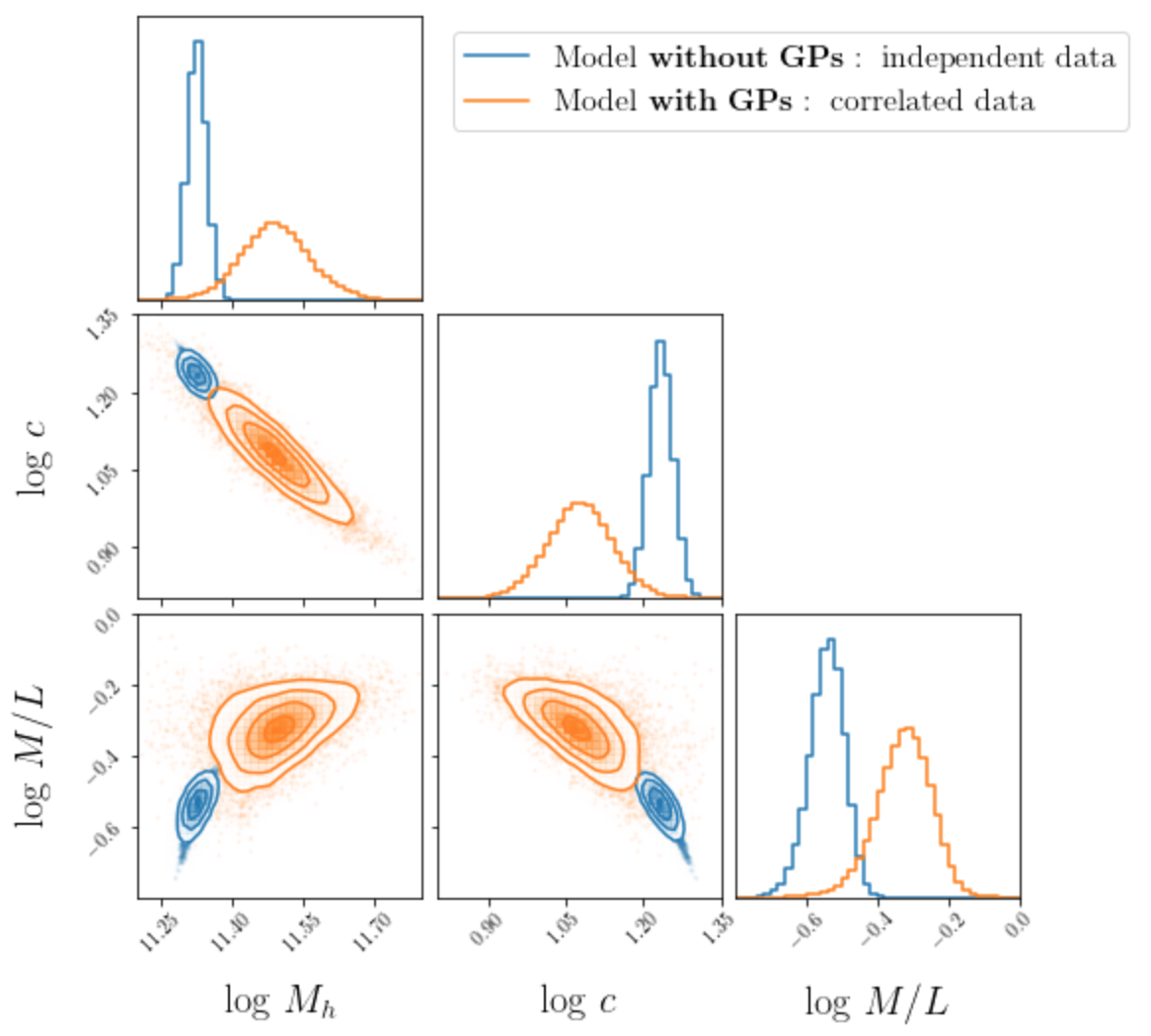

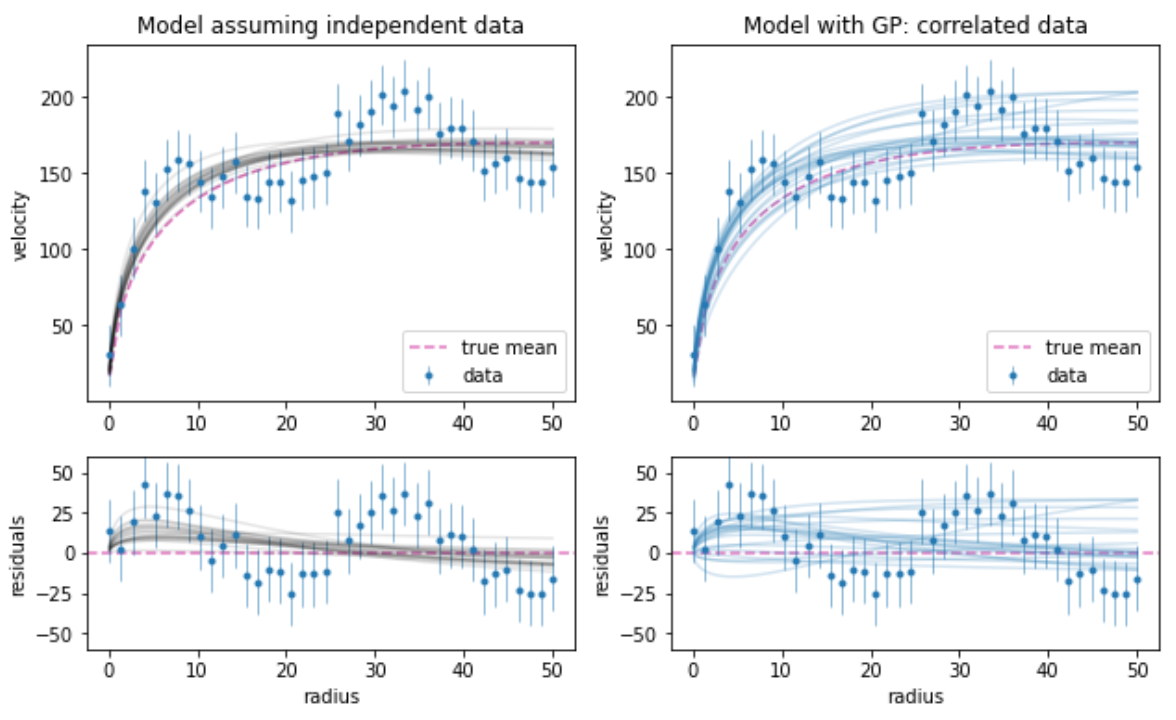

Rotation curve decompositions with Gaussian Processes: taking into account data correlations leads to unbiased results

Correlations between velocity measurements in disk galaxy rotation curves are usually neglected when fitting dynamical models. This notebook, which accompanies the paper Posti (2022), Res. Notes AAS, 6, 233, shows how data correlations can be taken into account in rotation curve decompositions using Gaussian Processes.

Gaussian Processes: modelling correlated noise in a dataset

Independent data points are most often just a convenient idealisation, one that can at times hamper your model inference and even bias your results. Learn how to embrace the reality of correlated noise in the data and marginalize the parameter posteriors with Gaussian Processes.
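To make the idea concrete, here is a minimal NumPy sketch of the Gaussian log-likelihood with correlated residuals: the covariance is a squared-exponential (RBF) kernel plus the usual diagonal measurement errors, and setting the kernel amplitude to zero recovers the independent-data case. The kernel choice and its hyperparameters (`amp`, `ell`) are assumptions for illustration; in practice they are fitted or marginalised together with the model parameters, usually with a dedicated GP library.

```python
import numpy as np

def rbf_kernel(x, amp, ell):
    """Squared-exponential covariance between all pairs of positions x."""
    d = x[:, None] - x[None, :]
    return amp**2 * np.exp(-0.5 * (d / ell) ** 2)

def gp_loglike(resid, x, yerr, amp, ell):
    """log N(resid | 0, K + diag(yerr^2)), evaluated stably via a Cholesky factor."""
    C = rbf_kernel(x, amp, ell) + np.diag(yerr**2)
    L = np.linalg.cholesky(C)
    alpha = np.linalg.solve(L, resid)          # L alpha = resid, so alpha.alpha = r^T C^-1 r
    logdet = 2.0 * np.sum(np.log(np.diag(L)))
    n = len(resid)
    return -0.5 * (alpha @ alpha + logdet + n * np.log(2.0 * np.pi))

x = np.linspace(0.5, 20.0, 25)                 # e.g. radii in kpc
yerr = np.full_like(x, 3.0)                    # e.g. velocity errors in km/s
resid = 5.0 * np.sin(x / 3.0)                  # hypothetical smooth, correlated residuals
print(gp_loglike(resid, x, yerr, amp=0.0, ell=2.0))   # independent errors only
print(gp_loglike(resid, x, yerr, amp=5.0, ell=2.0))   # with a correlated-noise term
```

The only change from the usual chi-square fit is the off-diagonal covariance; the model residuals and error bars are used exactly as before.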

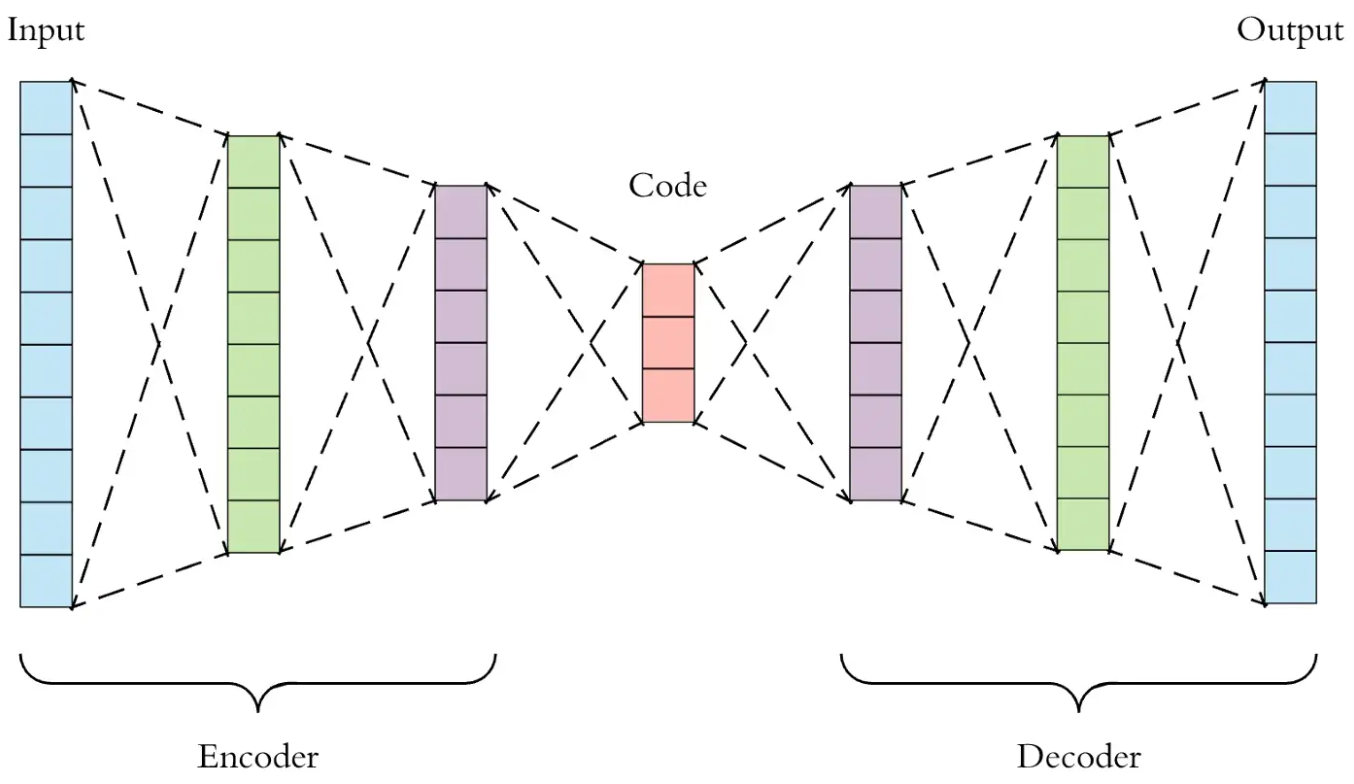

Variational Autoencoder: learning an underlying distribution and generating new data

Constructing an autoencoder that learns the underlying distribution of the input data, generated from a multi-dimensional smooth function f=f(x_1,x_2,x_3,x_4). The learned distribution can then be sampled to generate new data.
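Two ingredients turn a plain autoencoder into a variational one: the encoder outputs a mean and a log-variance for each latent dimension, a latent vector is drawn with the reparameterisation trick z = mu + sigma * eps, and the loss adds a KL-divergence term that pulls the approximate posterior towards a standard normal prior. A minimal NumPy sketch of those two pieces, with a hypothetical 2D latent space; the encoder and decoder networks themselves are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, sigma^2) as mu + sigma * eps, so that in an autodiff
    framework gradients can flow through mu and log_var."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions, one value per sample."""
    return 0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var, axis=-1)

# Hypothetical encoder outputs for a batch of 4 inputs and a 2D latent space
mu = np.array([[0.0, 0.0], [1.0, -1.0], [0.5, 0.2], [0.0, 0.0]])
log_var = np.array([[0.0, 0.0], [0.0, 0.0], [-1.0, -1.0], [1.0, 0.5]])

z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
print(z.shape, kl)

# Generating new data: sample the prior and pass it through the (omitted) decoder
z_new = rng.standard_normal((10, 2))
```

The total loss is then the reconstruction error plus this KL term, averaged over the batch.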

Autoencoder represents a multi-dimensional smooth function

Setting up a simple autoencoder neural network to reproduce a dataset obtained by sampling a multi-dimensional smooth function f=f(x_1,x_2,x_3,x_4). As an example I’m using a disc+halo rotation curve model where both components are described by two-parameter circular velocity profiles.
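A minimal sketch of such a four-parameter function, for illustration only: here the disc is a Kuzmin disc (mass Md, scale length a) and the halo a pseudo-isothermal sphere (asymptotic velocity v_inf, core radius rc), added in quadrature. These particular profiles and parameter ranges are my assumption, not necessarily those used in the notebook. Sampling the four parameters and evaluating the curve on a fixed radial grid gives the kind of dataset the autoencoder is asked to reproduce.

```python
import numpy as np

G = 4.30091e-6  # gravitational constant in kpc (km/s)^2 / Msun

def v_kuzmin(R, Md, a):
    """Midplane circular velocity of a Kuzmin disc of mass Md and scale length a."""
    return np.sqrt(G * Md * R**2 / (R**2 + a**2) ** 1.5)

def v_pseudo_iso(R, v_inf, rc):
    """Circular velocity of a pseudo-isothermal halo with asymptotic speed v_inf and core rc."""
    return v_inf * np.sqrt(1.0 - (rc / R) * np.arctan(R / rc))

def rotation_curve(R, Md, a, v_inf, rc):
    """f(x_1, x_2, x_3, x_4): disc and halo contributions added in quadrature."""
    return np.sqrt(v_kuzmin(R, Md, a) ** 2 + v_pseudo_iso(R, v_inf, rc) ** 2)

# Build a dataset by sampling the 4 parameters (ranges are hypothetical)
rng = np.random.default_rng(1)
R = np.linspace(0.5, 30.0, 40)   # kpc
n = 1000
params = np.column_stack([
    10 ** rng.uniform(9.0, 11.0, n),   # Md [Msun]
    rng.uniform(1.0, 5.0, n),          # a [kpc]
    rng.uniform(50.0, 250.0, n),       # v_inf [km/s]
    rng.uniform(0.5, 10.0, n),         # rc [kpc]
])
curves = np.array([rotation_curve(R, *p) for p in params])
print(curves.shape)  # (1000, 40)
```

Each row of `curves` is one input vector for the autoencoder; since the curves are fully determined by four numbers, a four-dimensional bottleneck should in principle suffice.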

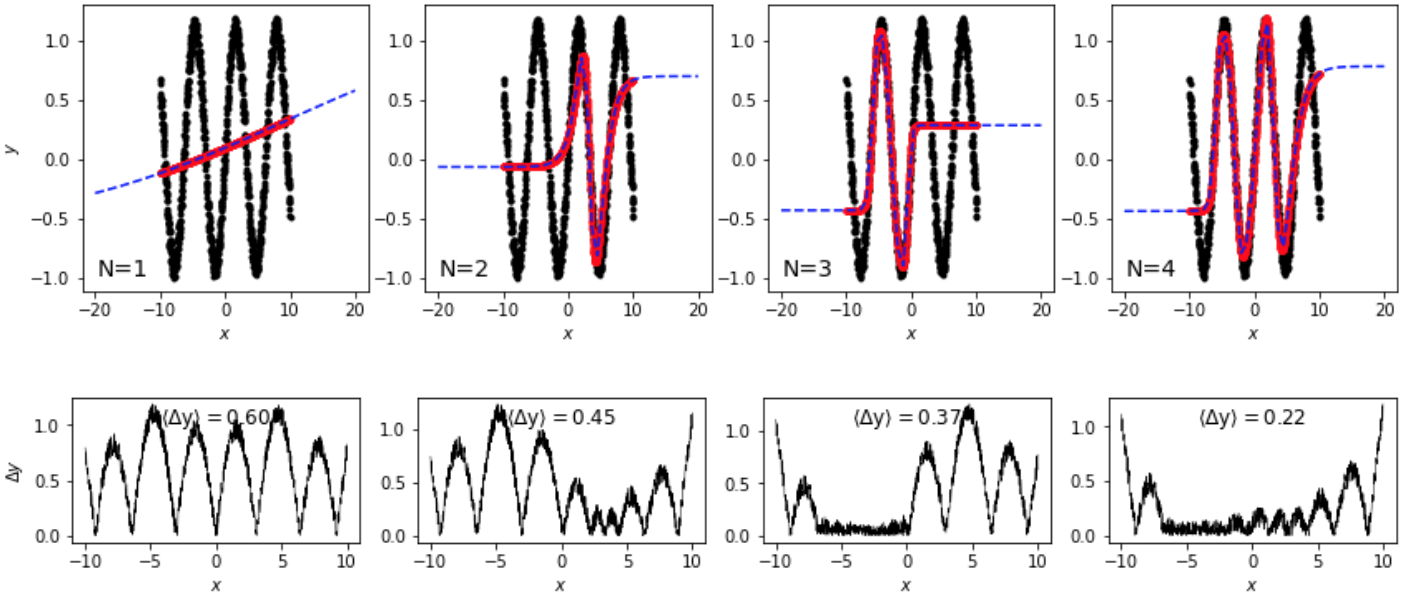

Understanding how basic linear NNs handle non-linearities

A deep exploration, and visualization, of how a single-layer linear neural network (NN) is able to approximate non-linear behaviours with just a handful of neurons and the magic of activation functions.
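A hand-built example of the idea: a network with one hidden layer of n ReLU neurons and a linear output computes a piecewise-linear function, and by choosing the hidden biases as knots and the output weights as slope changes it can interpolate a non-linear target such as y = x^2 on [0, 1]. The weights below are set analytically rather than trained, to keep the sketch short; the maximum error of this interpolant is 1/(4n^2), so each extra neuron buys accuracy.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def relu_net_for_square(n):
    """Weights of a 1-hidden-layer ReLU net interpolating y = x^2 at the knots k/n."""
    knots = np.arange(n) / n                       # neuron k switches on at x = k/n
    w_out = np.full(n, 2.0 / n)                    # each new neuron raises the slope by 2/n...
    w_out[0] = 1.0 / n                             # ...except the first, which sets slope 1/n
    return knots, w_out

def net(x, knots, w_out):
    hidden = relu(x[:, None] - knots[None, :])     # hidden layer: input weight 1, bias -k/n
    return hidden @ w_out                          # linear output layer

x = np.linspace(0.0, 1.0, 1001)
for n in (2, 5, 10):
    knots, w_out = relu_net_for_square(n)
    err = np.max(np.abs(net(x, knots, w_out) - x**2))
    print(n, err, 1.0 / (4 * n**2))   # observed vs theoretical maximum error
```

Without the ReLU the hidden layer would collapse into a single linear map, and no number of neurons could bend the output away from a straight line.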